Lessons Non-American Students Are Taught About America (25 Pics)

An AskReddit thread asked how American history is taught in other countries, and people from around the world responded. There’s nothing more American than assuming non-Americans should know anything about our history. Most Americans don’t know much about our own history to begin with. Still, it’s never too late to learn, I guess.

Non-US citizens of Reddit, what do your schools teach you about the United States?

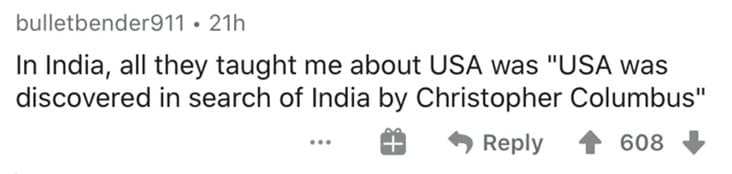

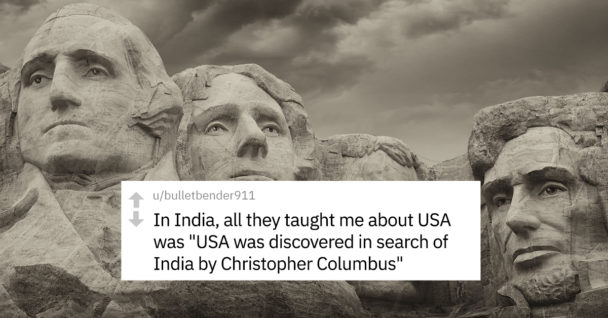

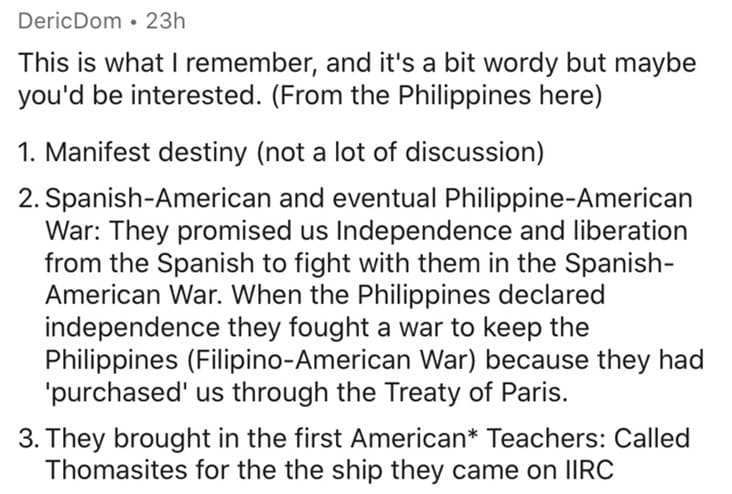

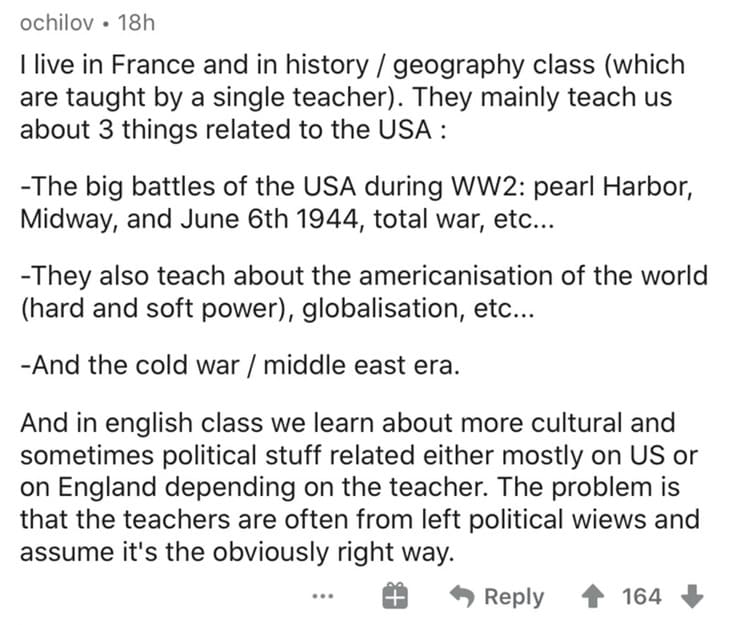

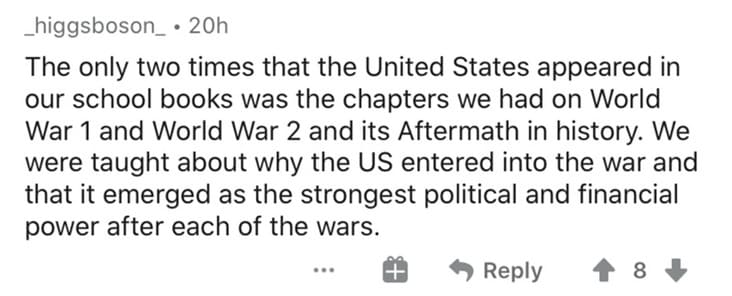

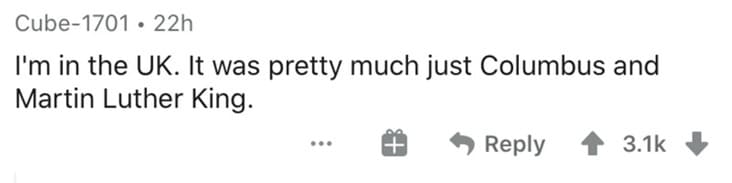

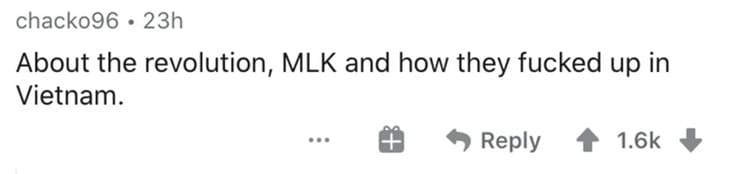

1.

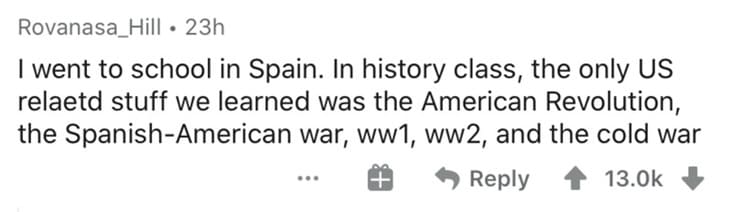

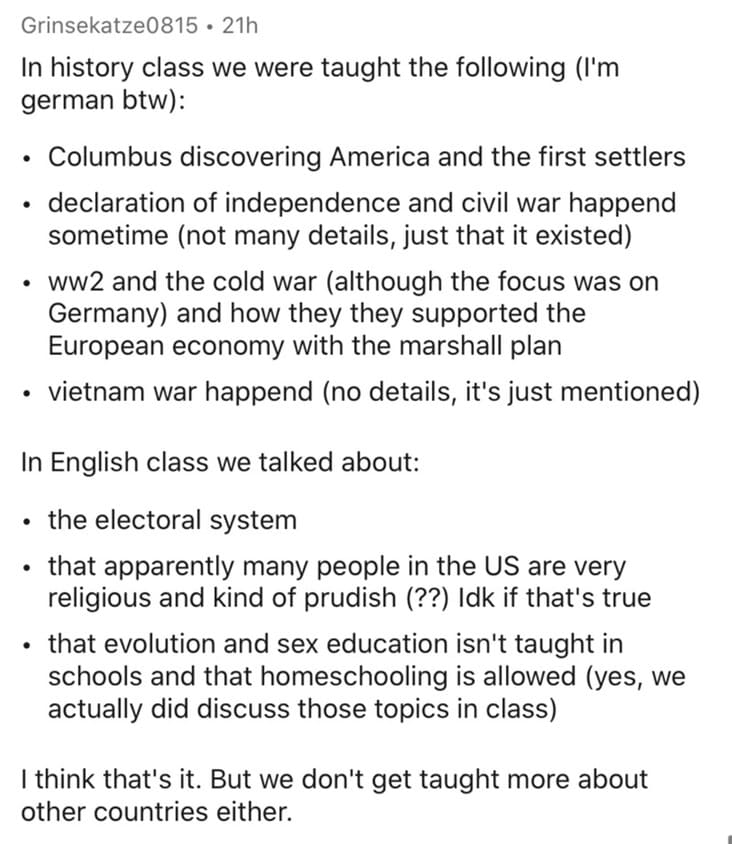

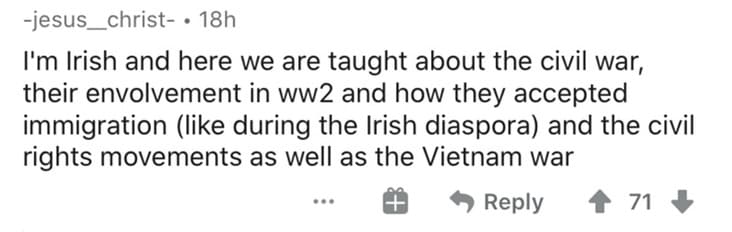

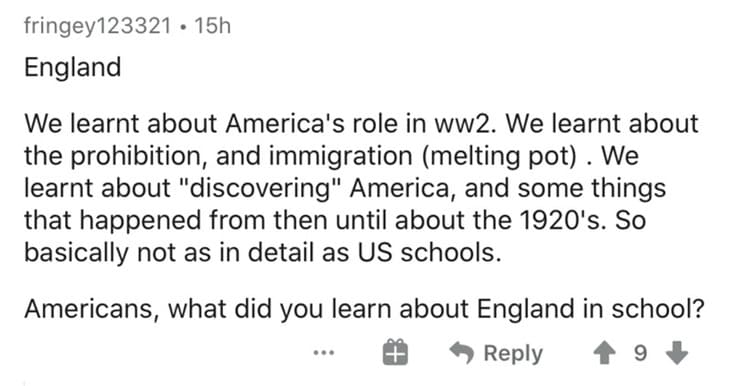

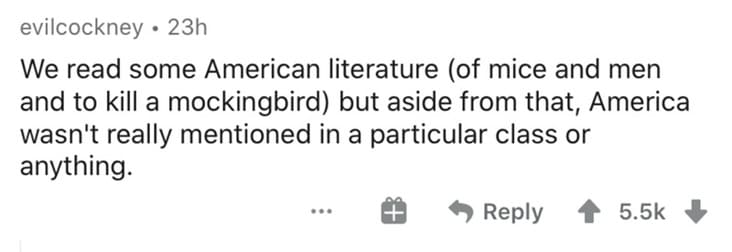

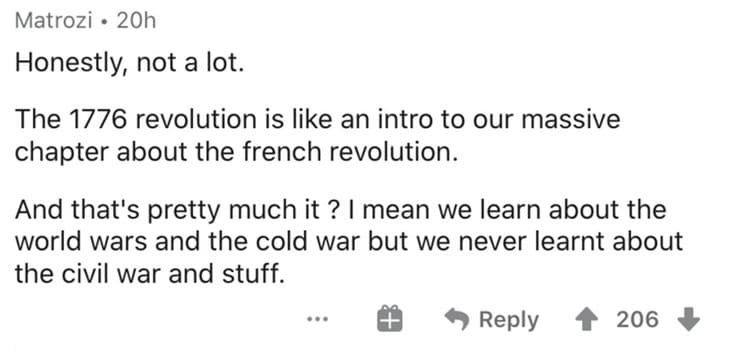

2.

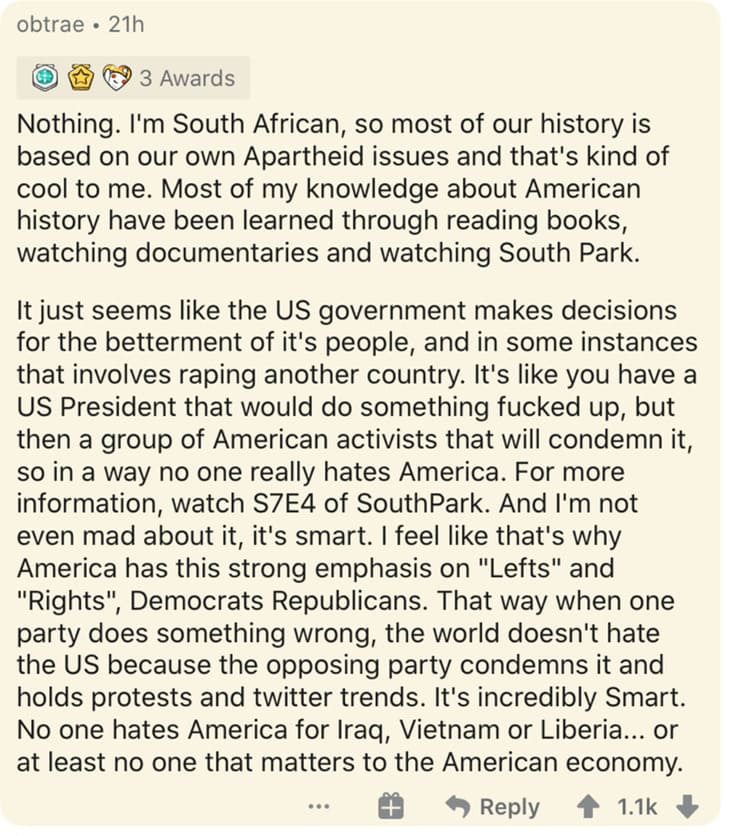

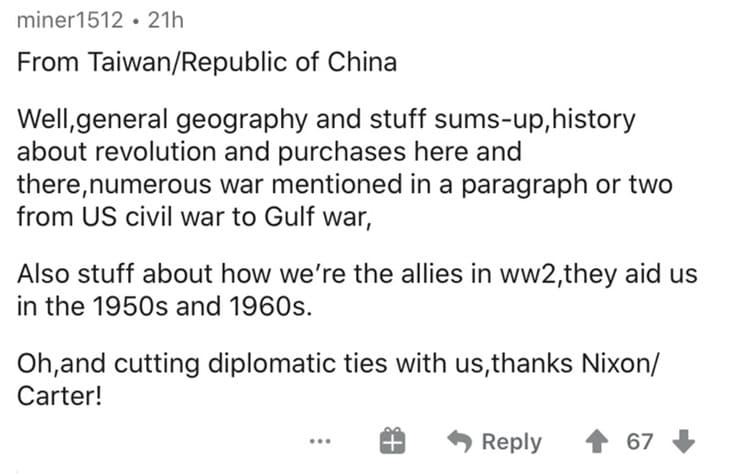

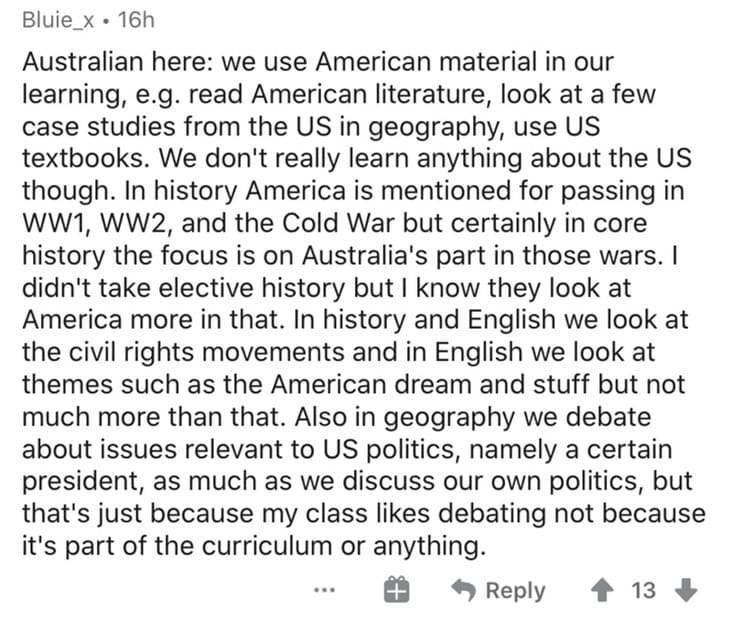

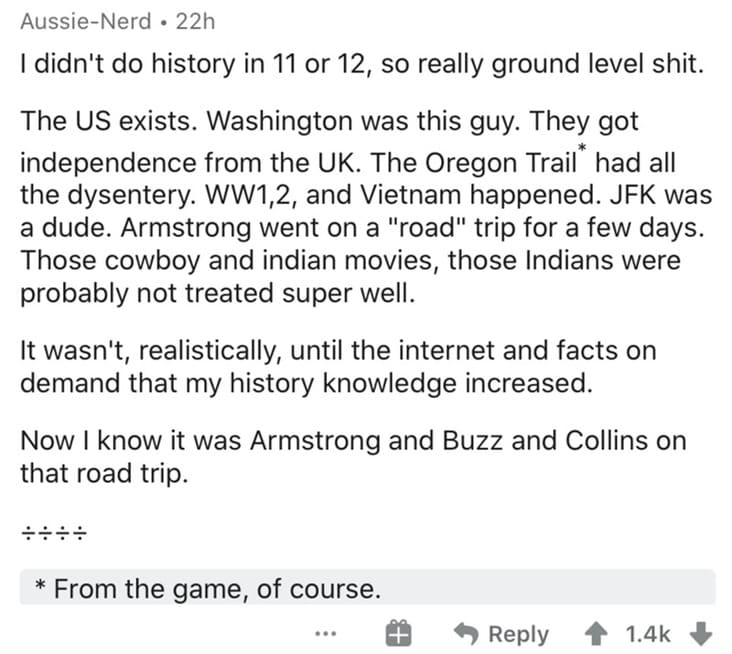

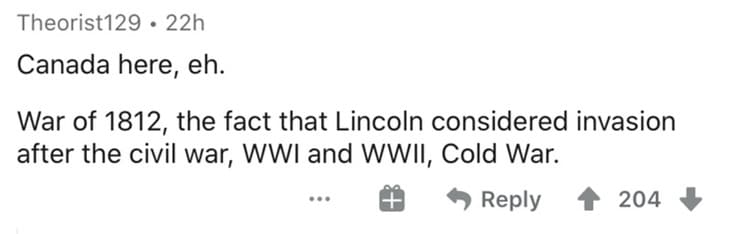

3.

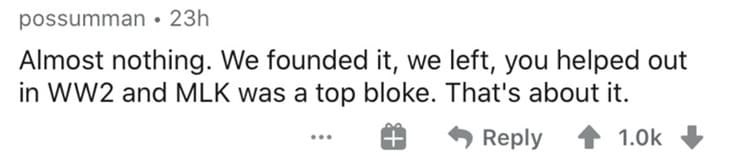

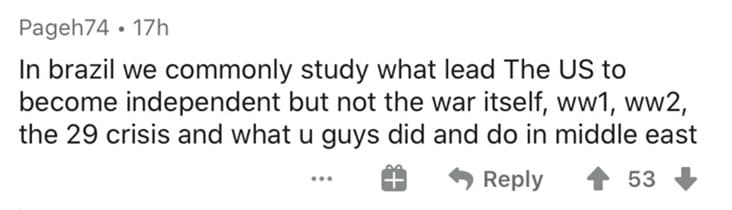

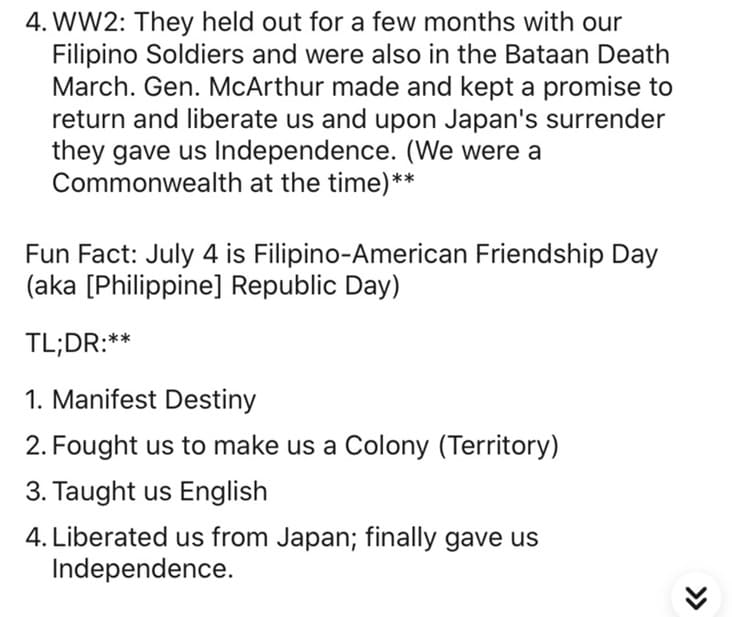

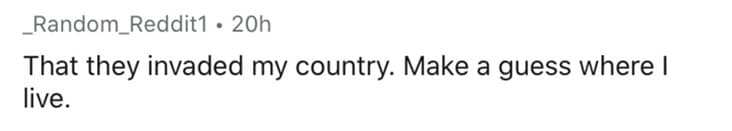

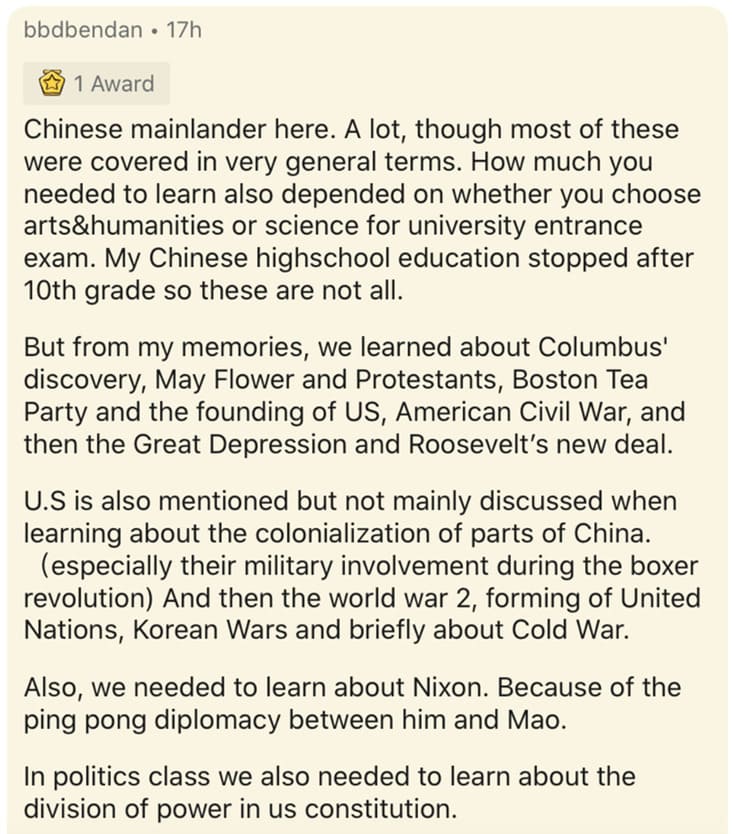

4.

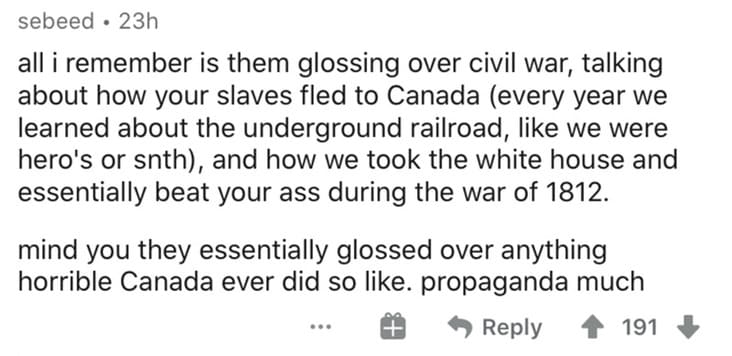

5.

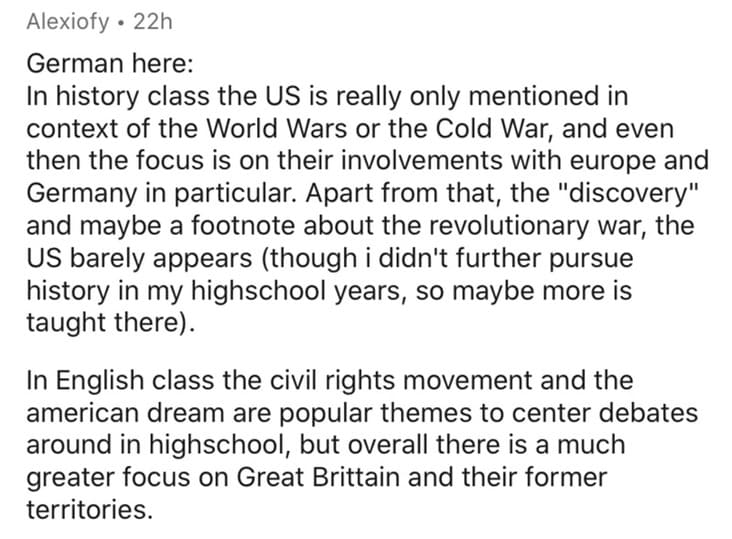

6.

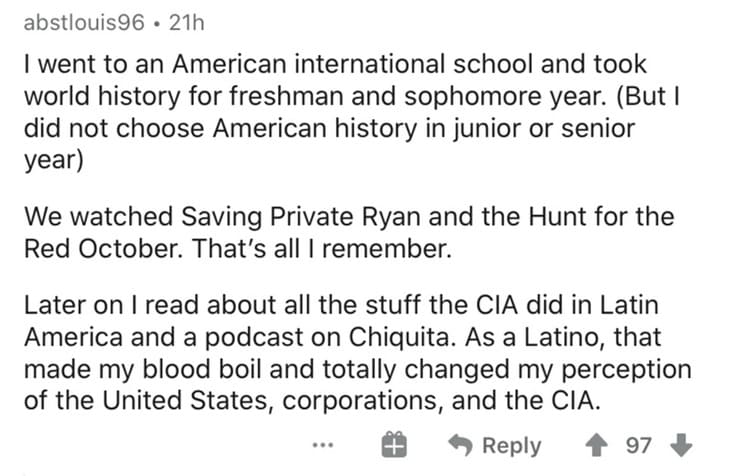

7.

8.

9.

10.

11.

12.

13.

14.

15.

16.

17.

18.

19.

20.

21.

22.

23.

24.

25.