Twitter Users Are Freaked Out That The Photo Preview Seems To Always Pick White People

It’s well known that Silicon Valley has had its fair share of accusations of racial bias. Now Twitter is under fire for the platform’s photo-cropping algorithm, which some allege has a racial bias of its own.

Last week, Twitter user Tony Arcieri (@bascule) started playing around with how Twitter presents its image previews and found a disturbing trend, which they shared on the timeline:

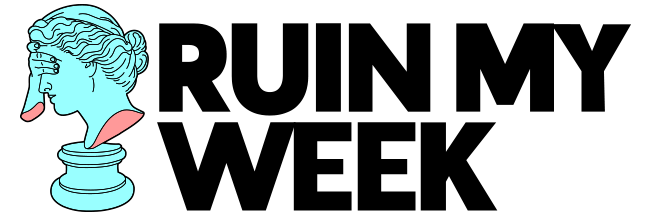

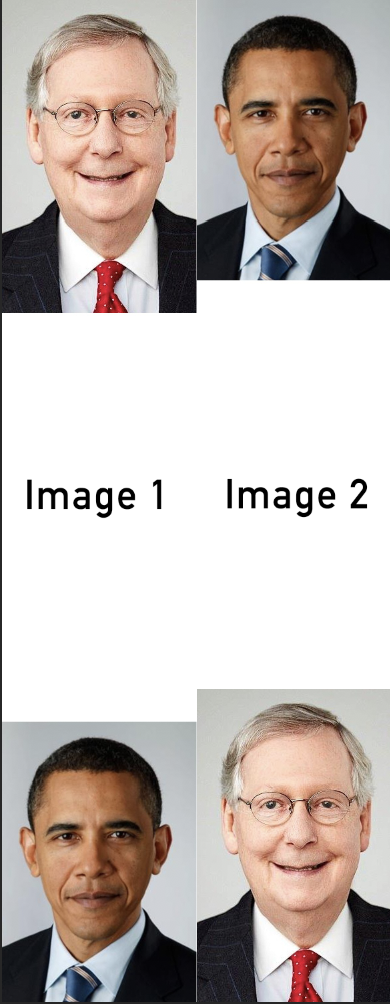

Trying a horrible experiment…

Which will the Twitter algorithm pick: Mitch McConnell or Barack Obama? pic.twitter.com/bR1GRyCkia

— Tony “Abolish (Pol)ICE” Arcieri 🦀 (@bascule) September 19, 2020

They made two images of the same size, each containing photos of both men. One had Senate Majority Leader Mitch McConnell at the top and former President Barack Obama at the bottom; the other flipped their positions.

When the images were tweeted, Twitter’s algorithm showed McConnell in the preview for both. Arcieri then added a thread to the tweet, varying other aspects of the images to see whether the auto-preview algorithm behaved consistently.

“It’s the red tie! Clearly the algorithm has a preference for red ties!”

Well let’s see… pic.twitter.com/l7qySd5sRW

— Tony “Abolish (Pol)ICE” Arcieri 🦀 (@bascule) September 19, 2020

The two men lined up once Arcieri inverted the photo colors.

Let’s try inverting the colors… (h/t @KnabeWolf) pic.twitter.com/5hW4owmej2

— Tony “Abolish (Pol)ICE” Arcieri 🦀 (@bascule) September 19, 2020

Other users picked up on the tweet and started experimenting with the auto-preview on their own.

help does twitter only focus on the white dude?? pic.twitter.com/AYZvOa5iMb

— dhara ☕ (@onyowalkman) September 21, 2020

One user found the algorithm prefers images of President Obama when they are lighter.

These images in particular, comparing low contrast to high contrast Obama, are much more telling. The algorithm seems to prefer a lighter Obama over a darker one: https://t.co/8EAYnYcRs3

— Tony “Abolish (Pol)ICE” Arcieri 🦀 (@bascule) September 21, 2020

The tweet went viral, drawing nearly 200k likes and retweets, including from notable Twitter users like Kumail Nanjiani, a star of the HBO series Silicon Valley.

Wow. This would be shocking if it wasn’t absolutely predictable. https://t.co/S0IxZ9vwek

— Kumail Nanjiani (@kumailn) September 20, 2020

Twitter leaders are now investigating why this happened. Many people have voiced their disappointment in how the platform failed people of color, and Twitter staff have signaled their intention to fix the technology.

thanks to everyone who raised this. we tested for bias before shipping the model and didn’t find evidence of racial or gender bias in our testing, but it’s clear that we’ve got more analysis to do. we’ll open source our work so others can review and replicate. https://t.co/E6sZV3xboH

— liz kelley (@lizkelley) September 20, 2020

This is a very important question. To address it, we did analysis on our model when we shipped it, but needs continuous improvement.

Love this public, open, and rigorous test — and eager to learn from this. https://t.co/E8Y71qSLXa

— Parag Agrawal (@paraga) September 20, 2020